How the AI industry profits from catastrophe

Published

3 years ago

By

Terry Power

It was meant to be a temporary side job—a way to earn some extra money. Oskarina Fuentes Anaya signed up for Appen, an AI data-labeling platform, when she was still in college studying to land a well-paid position in the oil industry.

But then the economy tanked in Venezuela. Inflation skyrocketed, and a stable job, once guaranteed, was no longer an option. Her side gig was now full time; the temporary now the foreseeable future.

Today Fuentes lives in Colombia, one of millions of Venezuelan migrants and refugees who have left their country in search of better opportunities. But she’s trapped at home—both by a chronic illness that developed after delayed access to health care and by opaque algorithms that dictate when she works and how much she earns.

Despite threats from Appen to retaliate against her, she chose to go on the record as a named source. She wants people to understand what it is like to be a critical part of the global AI development pipeline yet be mistreated and made invisible by the beneficiaries of her work. She wants the people who do this work to be seen.

Appen is among dozens of companies that offer data-labeling services for the AI industry. If you’ve bought groceries on Instacart or looked up an employer on Glassdoor, you’ve benefited from such labeling behind the scenes. Most profit-maximizing algorithms, which underpin e-commerce sites, voice assistants, and self-driving cars, are based on deep learning, an AI technique that relies on scores of labeled examples to expand its capabilities.

The insatiable demand has created a need for a broad base of cheap labor to manually tag videos, sort photos, and transcribe audio. The market value of sourcing and coordinating that “ghost work,” as it was memorably dubbed by anthropologist Mary Gray and computational social scientist Siddharth Suri, is projected to reach $13.7 billion by 2030.

Over the last five years, crisis-ridden Venezuela has become a primary source of this labor. The country plunged into the worst peacetime economic catastrophe facing a country in nearly 50 years right as demand for data labeling was exploding. Droves of well-educated people who were connected to the internet began joining crowdworking platforms as a means of survival.

“It was like a freak coincidence,” says Florian Alexander Schmidt, a professor at the University of Applied Sciences HTW Dresden who has studied the rise of the data-labeling industry.

Venezuela’s crisis has been a boon for these companies, which suddenly gained some of the cheapest labor ever available. But for Venezuelans like Fuentes, the rise of this fast-growing new industry in their country has been a mixed blessing. On one hand, it’s been a lifeline for those without any other options. On the other, it’s left them vulnerable to exploitation as corporations have lowered their pay, suspended their accounts, or discontinued programs in an ongoing race to offer increasingly low-cost services to Silicon Valley.

“There are huge power imbalances,” says Julian Posada, a PhD candidate at the University of Toronto who studies data annotators in Latin America. “Platforms decide how things are done. They make the rules of the game.”

To a growing chorus of experts, the arrangement echoes a colonial past when empires exploited the labor of more vulnerable countries and extracted profit from them, further impoverishing them of the resources they needed to grow and develop.

Now, as some platforms are turning their attention to other countries in search of even cheaper pools of labor, the model could continue to spread. What began in Venezuela set an expectation among players in the AI industry for how little they should have to pay for such services, and it created a playbook for how to meet the prices that clients have come to rely on.

“The Venezuela example made so clear how it’s a mixture of poverty and good infrastructure that makes this type of phenomenon possible,” Schmidt says. “As crises move around, it’s quite likely there will be another country that could fulfill that role.”

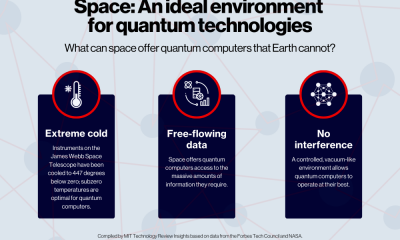

It was, of all things, the old-school auto giants that caused the data-labeling industry to explode.

German car manufacturers, like Volkswagen and BMW, were panicked that the Teslas and Ubers of the world threatened to bring down their businesses. So they did what legacy companies do when they encounter fresh-faced competition: they wrote blank checks to keep up.

The tech innovation of choice was the self-driving car. The auto giants began pouring billions into their development, says Schmidt, pushing the needs for data annotation to new levels.

Like all AI models built on deep learning, self-driving cars need millions, if not billions, of labeled examples to be taught to “see.” These examples come in the form of hours of video footage: every frame is carefully annotated to identify road markings, vehicles, pedestrians, trees, and trash cans for the car to follow or avoid. But unlike AI models that might categorize clothes or recommend news articles, self-driving cars require the highest levels of annotation accuracy. One too many mislabeled frames can be the difference between life and death.

For over a decade, Amazon’s crowdworking platform Mechanical Turk, or MTurk, had reigned supreme. Launched in 2005, it was the de facto way for companies to access low-wage labor willing to do piecemeal work. But MTurk was also a generalist platform: as such, it produced varied results and couldn’t guarantee a baseline of quality.

As deep learning began to take off in the early 2010s, a new generation of more specialized AI crowdworking platforms emerged, seeking to ensure better accuracy with a more hands-on approach to both the clients and workers. When the automakers came along in 2017, they wanted not just better performance but accuracy of 99% or more. MTurk fell out of favor, and the specialized platforms took over. Other older platforms, like Appen, adapted to the newer approach.

One of the most notable companies among the new specialized firms was (and still is) Scale AI. Founded in 2016 by Alexandr Wang, at the time a 19-year-old student at MIT, it quickly amassed tens of thousands of annotation workers and signed on big-name clients, which today include Toyota Research, Lyft, and OpenAI. Investors fawned: “If you could be pulling a rickshaw or labeling data in an air-conditioned internet café, the latter is a better job,” Mike Volpi, a general partner at Index Ventures, told Bloomberg in 2019 after joining several others in handing the company a total of $100 million. Scale is now valued at $7.3 billion. In February, it was selected among several companies to provide services to the US Department of Defense under a blanket purchase agreement of up to $249 million.

Scale’s early growth rested on its ability to provide high-quality labeled data rapidly and cheaply, thanks mainly to raw manpower. In 2017, it launched a worker-facing platform called Remotasks to build a global pool of cheap contractors.

For some tasks, Scale first runs client data through its own AI systems to produce preliminary labels before posting the results to Remotasks, where human workers correct the errors. For others, according to company training materials reviewed by MIT Technology Review, the company sends the data straight to the platform. Typically, one layer of human workers takes a first pass at labeling; then another reviews the work. Each worker’s pay is tied to speed and accuracy, which eggs them on to complete tasks more quickly yet fastidiously.

Initially, Scale sought contractors in the Philippines and Kenya. Both were natural fits, with histories of outsourcing, populations that speak excellent English and, crucially, low wages. However, around the same time, competitors such as Appen, Hive Micro, and Mighty AI’s Spare5 began to see a dramatic rise in signups from Venezuela, according to Schmidt’s research. By mid-2018, an estimated 200,000 Venezuelans had registered for Hive Micro and Spare5, making up 75% of their respective workforces.

In 2019, Scale followed its competitors into Venezuela. After seeing its own uptick in signups from the country, company executives saw an opportunity to turn one of the world’s cheapest labor markets into a hub for its most intensive lidar annotation projects. It began aggressively recruiting Venezuelan workers, using referral codes and a social media marketing campaign that led people to believe they could make a lot of money.

The timing was fortuitous. Later that year, Uber acquired Mighty AI and restricted access to Spare5. Its labelers migrated to Remotasks in droves. Then in early 2020, in what it said was a way to help Venezuelans going through a historic hardship, Scale created a Venezuela-specific landing page for Remotasks and pushed users to join a new initiative called Remotasks Plus. The invitation-only program, which it later rolled out globally, promised participants a new opportunity to receive more training, increase their earnings through minimum hourly wages and bonuses, and seemingly advance inside the company.

Within a month, the onset of the global pandemic began driving up the program’s membership numbers. The new scheme firmly established Scale’s foothold in the country. Scale dominated as the go-to choice among prominent startups; Appen among tech giants like Google, YouTube, and Facebook; and Hive Micro among low-end clients with less stringent needs for quality.

The town where Fuentes lives is nestled in the mountains, a winding hour-long drive south of Colombia’s budding tech hub, Medellín. The 32-year-old shares an apartment with her husband, mom, aunt, uncle, and grandma, as well as her two dogs (“my kids,” she says).

The space doubles as her mom’s hair salon. As Fuentes, a diehard anime fan with pink and lavender hair, sets up her laptop in the living room, her mom gives a woman a haircut in the kitchen next to three other family members cooking lunch. The smaller of the two dogs, sporting a pink tutu and matching collar, settles down by Fuentes’s feet. Colorful paper flowers decorate the walls.

On her screen, a browser shows her running queue of tasks on Appen. Each displays a title and an anonymized client ID, as well as the number of units it’s divided into and how much she can earn—usually cents—per unit.

The tasks range widely, from image tagging to content moderation to product categorization (say, determining whether an object in a photo falls under the heading “jewelry,” “clothing,” or “bags”). This last task type has become so familiar that Fuentes no longer needs to translate the text from English to Spanish. For others, she uses Google Translate to understand.

To claim a task, she clicks in, and the system presents the client’s instructions. Sometimes they’re clear; sometimes they’re not. Sometimes there are none at all.

One task has proved impossible: her screen fills with a satellite image of a heavily forested area. There are no instructions—just a key that says “tree” and “not trees,” and a cursor that suggests she should be outlining the corresponding parts of the image. No matter what method she tries, her answer has been rejected every time. She’s convinced the client wants every tree—likely thousands—to be outlined individually.

As she completes a few of the easier tasks, a tally of her earnings in the top right corner creeps up in pennies. She can’t withdraw the money until it hits a $10 minimum, and then she must convert it into local currency. In Venezuela, this was complicated: most places don’t take payments from electronic wallets, and the black market to exchange them for local currency is filled with scams and high commissions. Now in Colombia, at least she can use PayPal.

She opens up another task she hasn’t been able to complete—this time in content moderation, and not for a client but as an assessment. If she passes, her task queue will start receiving more content moderation work, which is usually higher paying.

“Do these [social media videos] contain any crime or human rights violations?” it reads. Below, a series of video players with captions each have multiple-choice “yes” or “no” radio buttons.

(MIT Technology Review chose to redact the name of the social media platform after Appen spokesperson Christina Golden said the company could punish Fuentes if its client’s name appeared in this story.)

The problem is the video players are broken and show up as dark blank rectangles. It’s clearly a bug, but her past experiences with Appen customer service suggest it isn’t worth the trouble to alert them. “Would you like to try?” she asks, hopeful that someone with better English might figure it out from just the captions. But they are vague and riddled with slang. The task is, once again, impossible.

In college, Fuentes studied oil and gas engineering at a time when Venezuela’s state-owned petroleum company was generating significant wealth for the country. She was a good student and landed an internship, then a return offer for a full-time job. She was well on her way to the Venezuelan dream.

But by the final year of her master’s program, the economy was already collapsing. Oil prices were falling, and the country’s nearly complete dependence on those revenues threatened to send it into dramatic decline.

It was then that Fuentes learned about micro-working platforms and joined Appen, on advice from friends that “this one actually pays.” During breaks from writing her thesis, she squirreled away $10 to $15 a week in anticipation of the coming financial strain. Like many, she used an educational laptop that the government had issued to kids a few years earlier. Such programs were of a different era; those laptops have since been sold and resold among adults trying to access the digital economy.

By graduation, the crisis had deepened. As a result of the extraordinary hyperinflation, her return offer no longer covered basic living expenses, but no better jobs existed for students leaving university. She worried about her family’s safety if they stayed in the country; she wasn’t even sure they’d be able to afford food.

So in early 2019, with only enough money for a week of groceries, she and her husband crossed the border to Colombia, where she had dual citizenship. A generation earlier, in the same search for stability, her family had made the opposite journey, leaving Colombia for Venezuela to flee a different crisis.

The plan now was to start fresh. Instead they faced relentless reminders of the precariousness of their situation. A misunderstanding with their landlord nearly lost them their apartment. Then, as her husband struggled to get work authorization, Fuentes’s new employer, a local call center, announced that it would imminently be closing.

Under enormous stress, she barely thought twice when she began to experience intense physical discomfort, believing it would pass once the turmoil was over. But days after she started another call center job, she landed in the hospital for five days.

The doctor diagnosed acute diabetes and warned that it would kill her without immediate treatment. For a month after, she suffered debilitating cramps and lost her vision. When it came back, her mind instantly returned to how they would pay for her medication. So she pulled out her old educational laptop and began working on Appen full time.

The money, it turned out, was about the same amount she made at the call center—Wilson Pang, Appen’s CTO, says the company adjusts its pay per task to the minimum wage of each worker’s locale. But she could now stay home to rest more and take better care of herself, which included adhering to an intensive treatment regimen. She invested in a more powerful laptop to unlock higher-paying tasks like 3D lidar labeling for self-driving cars. She quickly made back the up-front costs and then some.

Fuentes smiles as she remembers this part of the story. With her husband employed and her earnings on Appen averaging $70 a week, she could finally breathe without constantly worrying about money. Those were the good days, she says, when—for just a fleeting moment—she felt she’d reached the end of a long and sunless tunnel.

For the majority of other Venezuelans, leaving the country was an impossibility. Those who turned to data annotation did so not just because they’d lost other jobs but because a wave of crime from increasing instability trapped them within their homes.

Working on the platforms became the full-time focus of many families, says Posada. Sometimes parents and children took turns on a shared computer; other times women took care of household chores so that the men of the household could work around the clock.

But as Fuentes would soon discover, the window of opportunity was getting smaller. Soon after Spare5 shuttered and the pandemic hit, the number of tasks on Appen began to dwindle as more and more workers joined the platform. Previously the task queue was reliably populated 24 hours a day, she says. Now it was increasingly empty, with work arriving erratically and at odd hours.

Whereas it was still enough to sustain her, users who joined later weren’t so lucky. Appen split its accounts into four levels. Users needed to complete tasks on levels 0 and 1 to a consistent standard before they could access additional jobs on levels 2 and 3.

Over time, lower-level tasks became nearly nonexistent, which meant creators of new accounts received negligible amounts of money. The only way to break in was to buy an existing high-level account in an underground market, but those who did so risked having their accounts shut down for violating company policy.

Golden says Appen has since moved away from this level-based model, but its projects still “have specific qualifications and therefore are not open to everyone.” “We pride ourselves on paying above minimum wage and adhere to our Crowd Code of Ethics,” she adds. “We hope that our platform can be a light for Venezuelans during the crisis and offer work to those who need it.”

This left Remotasks as the next best option. (While Hive Micro is the easiest service to join, it offers the most disturbing work—such as labeling terrorist imagery—for the most pitiful pay.) But no sooner had Remotasks Plus launched than the system started to show its cracks. Many users quickly realized that their hours were being undercounted, which lowered their weekly earnings. They were also held to higher standards, with greater risk of suspension for not being fast or precise enough.

Matt Park, the senior vice president of operations at Scale, says Remotasks “invests heavily in training and support for all taskers,” including a 24/7 Spanish-speaking support team, training courses, live training sessions, and community discussion channels. “Remotasks Plus workers were provided additional training and support through a specialized boot camp training program,” he says. Yet workers found there wasn’t adequate support to help people meet the standards required.

A few months in, Remo Plus capped earnings: anyone who worked over 60 hours a week would not be paid for extra time. Meanwhile, Scale continued its publicity campaign, posting videos to YouTube, Facebook, and Instagram with testimonials and attractive stock footage showing stacks of US dollars.
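The effect of such a cap is simple arithmetic. The following is a minimal sketch in Python, not a description of Scale's actual payroll logic: only the 60-hour limit comes from the reporting above, while the hourly rate `HOURLY_RATE_USD` and the function names are hypothetical placeholders chosen for illustration.

```python
# Illustrative only: the 60-hour cap is from the article; the hourly rate
# is a hypothetical placeholder, not a figure published by Scale.
WEEKLY_HOUR_CAP = 60
HOURLY_RATE_USD = 1.00  # hypothetical


def weekly_pay(hours_worked: float, rate: float = HOURLY_RATE_USD) -> float:
    """Pay for one week when hours beyond the cap earn nothing."""
    paid_hours = min(hours_worked, WEEKLY_HOUR_CAP)
    return paid_hours * rate


def unpaid_hours(hours_worked: float) -> float:
    """Hours worked past the cap, which go uncompensated."""
    return max(hours_worked - WEEKLY_HOUR_CAP, 0)


def effective_hourly_rate(hours_worked: float, rate: float = HOURLY_RATE_USD) -> float:
    """Average pay per hour actually worked, which falls once the cap is exceeded."""
    if hours_worked <= 0:
        return 0.0
    return weekly_pay(hours_worked, rate) / hours_worked
```

Under these assumed numbers, someone who works 80 hours is paid for 60, so every hour past the cap lowers the average pay for all the hours worked that week.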

“They promise stability, they sell you this like a long-term job, and they’re lying,” says a university student who worked on Remo Plus and asked to remain anonymous for fear of retaliation.

But when workers experienced frustrations, they found themselves unable to address them. Their main communication channels to the company were through trainers, community managers, and recruiters who were often also contractors of the company.

As a result, those people had neither the ability nor the incentive to advocate on workers’ behalf. Workers who asked questions got silence, excuses, and mistreatment. Ricardo Huggines, a former computer engineer who began working on Remo Plus to support his wife and kids, says he was kicked out of the program after being too vocal about reduced payments and increased workloads.

“We take all worker complaints seriously and investigate allegations,” Park says. “Access may be revoked in instances such as consistent low performance or committing fraud or spam.”

As time went on, the program grew more disorganized. The platform was riddled with bugs and could crash, leaving people with incomplete tasks for which they were later punished. Scale also struggled to move money into Venezuela, at one point switching from PayPal to the digital wallet AirTM, which better supported bolivares, the local currency. In the Discord server that Scale originally set up exclusively for Venezuelan participants, which MIT Technology Review gained access to, workers often complained about payments being delayed for weeks or even months.

At the start of 2021, Scale slashed its bonuses and squeezed workers’ earnings even more. In April, it finally shut down Remo Plus entirely, migrating everyone back to the standard Remotasks platform. Many workers say they never received their final payout, though Park says the company records show “no outstanding payments or pay-related support inquiries from this program.” One worker showed MIT Technology Review screenshots of an eight-month-long payment dispute with customer service that the agent ultimately marked as resolved without her ever receiving her money.

Some workers heard rumors that the company had closed the program as punishment for people who’d taken advantage of the system. In Discord, Scale officially told workers the program had been an experiment and the experiment was now over.

For many, the whiplash disrupted their livelihood—and their family’s means of survival. “From the way they treated us, I realized that their approach was to drain each user as much as possible,” says Huggines, “and then dispose of them and bring new users in.”

These days, Fuentes waits anxiously by her computer, ready to start tasking at a moment’s notice. Some weeks, her hypervigilance leads to nothing; others she brings in a dismal $6 to $8, falling short of the threshold to withdraw her money. On occasion, a high-paying task appears, and she makes $300 in a few hours.

The windfalls come just often enough to make her average income tenable. But they’re also rare enough to keep her tethered to her computer. If a good task appears, there are only seconds to claim it, and she can’t afford to lose the opportunity. Once, while out on a walk, she missed a task that would have made her $100. Now she restricts her walks to weekends, having learned that clients usually post tasks during their working hours.

She vents her frustrations in Telegram and Discord groups of other Venezuelans on Appen. Members trade strategies and hacks for increasing their earnings. They also share tools developed by the community to make the work easier. Fuentes uses a number of these tools, including a browser extension that sounds an alarm when a new task appears. She keeps it on loud even when she sleeps, to wake her up in the middle of the night.

One group in particular has helped her significantly increase her earnings. Appen sends different work to different workers, basing the distribution on a host of signals including their location, speed, and proficiency. While those in the group don’t know the exact mechanism, they know they each receive different tasks. And as work on Appen began dwindling, they realized they could access one another’s.

The group now pools tasks together. Anytime a task appears in one member’s queue, that person copies the task-specific URL to everyone else. Anyone who clicks it can then claim the task as their own, even if it never showed up in their own queue. The system isn’t perfect. Each task has a limited number of units, such as the number of images that need to be labeled, which disappear faster when multiple members claim the same task in parallel. But Fuentes says so long as she’s clicked the link before it goes away, the platform will let her complete whatever units are left, and Appen will pay. “We all help each other out,” she says.
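The trade-off Fuentes describes, where a shared link spreads a fixed number of units across more people, can be illustrated with a toy model. This Python sketch is an assumption-laden simplification rather than Appen's actual system: the `Task` class, the round-robin order, and the per-unit rate are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A task split into a fixed number of units (e.g. images to label)."""
    units_left: int
    pay_per_unit_cents: int  # hypothetical; real per-unit rates vary by task
    earned_cents: dict = field(default_factory=dict)

    def complete_unit(self, worker: str) -> bool:
        """Credit the worker for one unit; return False once none remain."""
        if self.units_left == 0:
            return False
        self.units_left -= 1
        self.earned_cents[worker] = (
            self.earned_cents.get(worker, 0) + self.pay_per_unit_cents
        )
        return True


def work_in_rounds(task: Task, workers: list) -> dict:
    """Everyone who opened the shared link takes one unit per round."""
    while task.units_left > 0:
        for worker in workers:
            if not task.complete_unit(worker):
                break
    return task.earned_cents


solo = work_in_rounds(Task(units_left=12, pay_per_unit_cents=2), ["a"])
pooled = work_in_rounds(Task(units_left=12, pay_per_unit_cents=2), ["a", "b", "c"])
```

In this model the total payout for a task never changes. Pooling only divides it, which is why members gain from the arrangement only when it brings them tasks that would not have reached their own queues.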

The group also keeps track of which client IDs should be avoided. Some clients are particularly harsh in grading task performance, which can cause a devastating account suspension. Nearly every member of the group has experienced at least one, Fuentes says. When it happens, you lose your access not only to new tasks but to any earnings that haven’t been withdrawn.

The time it happened to Fuentes, she received an email saying she had completed a task with “dishonest answers.” When she appealed, customer service confirmed it was an administrative error. But it still took months of pleading, using Google Translate to write messages in English, before her account was reinstated, according to communications reviewed by MIT Technology Review. (“We … have several initiatives in place to increase the response time,” Golden says. “The reality is that we have thousands of requests a day and respond based on priority.”)

Golden says Appen has seen an uptick in workers engaging in acts it considers “fraud,” such as using VPNs to locate themselves in higher-wage countries, which is why the company proactively looks for these behaviors and shuts down accounts deemed illegitimate. “Our support team is actively working with each contributor on their situation to rectify any misunderstandings,” she says. But workers say it’s precisely the platform’s unrealistic expectations and trigger-happy policies that push them to find creative workarounds.

Since the shutdown of Remo Plus, conditions on Remotasks have also gotten worse. Workers say the platform continues to be buggy and sometimes misleading, while payments have become more unreliable. Some can spend hours completing tasks to find they received only a fraction of the total amount listed on each job. Others say sudden power outages mid-task can erase their work and cost them the pay they would have earned. (“The platform is designed to autosave tasker work throughout the process,” Park says.)

As Remotasks has continued its global expansion, annotators in Venezuela have also grown increasingly suspicious that they’re being treated differently from counterparts in higher-income countries. Annotators in North Africa, where the platform has expanded in the last two years, say the same: Scale has reduced their pay by more than a third in a matter of months and withheld or even taken away earnings, leaving some workers with negative pending payments (in other words, they owe Scale money), according to screenshots provided to MIT Technology Review.

The workers in Venezuela and North Africa say the Filipino and European annotators they speak to have never experienced the same mistreatment. “Payments are determined on a project basis, not a geographical basis,” Park says, adding that “in rare cases, Remotasks has encountered bugs which resulted in inaccurate pay estimates being displayed.”

Scale also tried to prevent workers from resisting these changes. Recently when a group of North African annotators sought to fight drastic pay cuts, they faced retaliation. The company threatened to ban anyone engaging in “revolutions and protests,” according to screenshots from the project-specific Discord and eight workers who risked having their Remotasks accounts shut down to speak about their experiences. The workers say Scale also created a new quota system that removes workers from the project if they don’t complete a certain number of tasks within a given time. The workers estimate that around 20 of them have already been booted.

“They treat us like we’re not human,” says Hossam Ashraf Esmael, a former community manager at Remotasks, speaking on behalf of the eight workers, “like we don’t deserve to make enough money.”

“In February, pay rates for this project were updated to be aligned with the average payments for other similar Remotasks projects,” Park says. “Remotasks is committed to paying fair wages in every region we operate … We regularly conduct evaluations of and updates to our pay.”

MIT Technology Review created our own Remotasks account based in Venezuela to corroborate workers’ testimonies. The experience was confusing and unforgiving. Task instructions were difficult to understand, with pages and pages of technical information. A timer ticked away at the top left of the screen, without a clear deadline or apparent way to pause it to go to the bathroom. (Park says this is an inactivity timer that returns a task to the pool for someone else to claim if a worker leaves it incomplete for too long.) Three mistakes seemed to send us back to the instructions page. Sometimes the platform failed to load.

During the training, the materials showed a GIF of a woman showering in dollar bills. Above, it said in Spanish: “If you make high-quality annotations and carefully follow the rules of the project, you can get a high compensation.” After two hours of work, which included completing a tutorial and 20 tasks for a penny each, Andrea Paola Hernández, the Venezuela-based reporter on this article, earned 0.11 US dollars. Park says workers in Venezuela earn an average of a little more than 90 cents an hour.

Simala Leonard, a computer science student at the University of Nairobi who studies AI and worked several months on Remotasks, says the pay for data annotators is “totally unfair.” Google’s and Tesla’s self-driving-car programs are worth billions, he says, and algorithm developers who work on the technology are rewarded with six-figure salaries.

Meanwhile, the people who do “the most fundamental part of machine learning” are paid a pittance, he says. “Without the data labeled well, the models can’t predict properly.”

In parallel with the rise of platforms like Scale, newer data-labeling companies have sought to establish a higher standard for working conditions. They bill themselves as ethical alternatives, offering stable wages and benefits, good on-the-job training, and opportunities for career growth and promotion.

But this model still accounts for only a tiny slice of the market. “Maybe it improves the lives of 50 workers,” says Milagros Miceli, a PhD candidate at the Technical University of Berlin who studies two such companies, “but it doesn’t mean that this type of economy as it’s structured works in the long run.”

Such companies are also constrained by players willing to race to the bottom. To keep their prices competitive, the firms similarly source workers from impoverished and marginalized populations—low-income youth, refugees, people with disabilities—who remain just as vulnerable to exploitation, Miceli says.

This has been particularly evident during the pandemic, when some of these companies began to loosen their standards. They lowered their wages and lengthened working hours as clients tightened budgets and the market’s sudden oversupply of labor drove down the average cost of data annotation. It has affected employees like Jana, a Kenya-based worker who asked us not to use her real name and says her diminishing income no longer supports her child. She now juggles two jobs. By day, she works full time at a firm seen as a pioneer in ethical data labeling. By night, she logs on to Remotasks and works from 3 a.m. until morning. “Because of corona, you don’t have an option. You just hope for better days,” she says.

But those better days won’t come without coordinated international advocacy and regulation to limit how low the industry can go, Posada says: “Platforms can move. If not the Philippines, then Venezuela. If not Venezuela, then somewhere else.”

Indeed, Scale has continued to expand well beyond Venezuela. During the pandemic, it offered virtual boot camps across Asia, Latin America, sub-Saharan Africa, and the Arabic-speaking countries. According to web traffic data from traffic analyzer Semrush, the proportion of logins to Remotasks from Venezuela is falling.

Data from web advertising shows it’s also specifically targeting Kenya with paid ads and has been conducting in-person boot camps in Nairobi. “I guess they know that people here are struggling,” says Calvin Otieno, a Kenya-based worker who left the platform after four months because the pay was “very demoralizing.”

Fuentes fears a day when Appen could also abandon her. Despite the stress and hardship it has caused, she remains overwhelmingly grateful. “I’ve survived because of this platform,” she says back in her living room. “Other platforms have stopped paying, but Appen has always been there.”

At the same time, she wishes Appen’s leadership could see how dedicated its workers are and do more to take care of them. “I hope in four to five years, Appen can become a more traditional employer,” she says. “They know we exist, that we can get sick, that we need security and health care.”

“We are proud of our contributors and are working hard to improve internal processes to make it a better experience for them,” Golden says. “We want her to know that we recognize her and empathize with her situation.”

As the sun begins to set, Fuentes asks her uncle to snap a photo. Her smile beams through her mask as she cuddles her dog. After so many years serving the platform and its clients as an anonymous worker, she wants people to see her face and know her name.

A few weeks later, she sends the photo with a message: “Don’t forget us,” it says.

My senior spring in high school, I decided to defer my MIT enrollment by a year. I had always planned to take a gap year, but after receiving the silver tube in the mail and seeing all my college-bound friends plan out their classes and dorm decor, I got cold feet. Every time I mentioned my plans, I was met with questions like “But what about school?” and “MIT is cool with this?”

Yeah. MIT totally is. Postponing your MIT start date is as simple as clicking a checkbox.

Now, having finished my first year of classes, I’m really grateful that I stuck with my decision to delay MIT, as I realized that having a full year of unstructured time is a gift. I could let my creative juices run. Pick up hobbies for fun. Do cool things like work at an AI startup and teach myself how to create latte art. My favorite part of the year, however, was backpacking across Europe. I traveled through Austria, Slovakia, Russia, Spain, France, the UK, Greece, Italy, Germany, Poland, Romania, and Hungary.

Moreover, despite my fear that I’d be losing a valuable year, traveling turned out to be the most productive thing I could have done with my time. I got to explore different cultures, meet new people from all over the world, and gain unique perspectives that I couldn’t have gotten otherwise. My travels throughout Europe allowed me to leave my comfort zone and expand my understanding of the greater human experience.

“In Iceland there’s less focus on hustle culture, and this relaxed approach to work-life balance ends up fostering creativity. This was a wild revelation to a bunch of MIT students.”

When I became a full-time student last fall, I realized that StartLabs, the premier undergraduate entrepreneurship club on campus, gives MIT undergrads a similar opportunity to expand their horizons and experience new things. I immediately signed up. At StartLabs, we host fireside chats and ideathons throughout the year. But our flagship event is our annual TechTrek over spring break. In previous years, StartLabs has gone on TechTrek trips to Germany, Switzerland, and Israel. On these fully funded trips, StartLabs members have visited and collaborated with industry leaders, incubators, startups, and academic institutions. They take these treks both to connect with the global startup sphere and to build closer relationships within the club itself.

Most important, however, the process of organizing the TechTrek is itself an expedited introduction to entrepreneurship. The trip is entirely planned by StartLabs members; we figure out travel logistics, find sponsors, and then discover ways to optimize our funding.

In organizing this year’s trip to Iceland, we had to learn how to delegate roles to all the planners and how to maintain morale when making this trip a reality seemed to be an impossible task. We woke up extra early to take 6 a.m. calls with Icelandic founders and sponsors. We came up with options for different levels of sponsorship, used pattern recognition to deduce the email addresses of hundreds of potential contacts at organizations we wanted to visit, and got scrappy about using our LinkedIn connections.

And as any good entrepreneur must, we had to learn how to be lean and maximize our resources. To stretch our food budget, we planned all our incubator and company visits around lunchtime in hopes of getting fed, played human Tetris as we fit 16 people into a six-person Airbnb, and emailed grocery stores to get their nearly expired foods for a discount. We even made a deal with the local bus company to give us free tickets in exchange for a story post on our Instagram account.

Tech

The Download: spying keyboard software, and why boring AI is best

Published

2 years ago on 22 August 2023

By

Terry Power

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

How ubiquitous keyboard software puts hundreds of millions of Chinese users at risk

For millions of Chinese people, the first software they download onto devices is always the same: a keyboard app. Yet few of them are aware that it may make everything they type vulnerable to spying eyes.

QWERTY keyboards are inefficient as many Chinese characters share the same latinized spelling. As a result, many switch to smart, localized keyboard apps to save time and frustration. Today, over 800 million Chinese people use third-party keyboard apps on their PCs, laptops, and mobile phones.

But a recent report by the Citizen Lab, a University of Toronto–affiliated research group, revealed that Sogou, one of the most popular Chinese keyboard apps, had a massive security loophole. Read the full story.

—Zeyi Yang

Why we should all be rooting for boring AI

Earlier this month, the US Department of Defense announced it is setting up a Generative AI Task Force, aimed at “analyzing and integrating” AI tools such as large language models across the department. It hopes they could improve intelligence and operational planning.

But those might not be the right use cases, writes our senior AI reporter Melissa Heikkilä. Generative AI tools, such as language models, are glitchy and unpredictable, and they make things up. They also have massive security vulnerabilities, privacy problems, and deeply ingrained biases.

Applying these technologies in high-stakes settings could lead to deadly accidents where it’s unclear who or what should be held responsible, or even why the problem occurred. The DoD’s best bet is to apply generative AI to more mundane things like Excel, email, or word processing. Read the full story.

This story is from The Algorithm, Melissa’s weekly newsletter giving you the inside track on all things AI. Sign up to receive it in your inbox every Monday.

The ice cores that will let us look 1.5 million years into the past

To better understand the role atmospheric carbon dioxide plays in Earth’s climate cycles, scientists have long turned to ice cores drilled in Antarctica, where snow layers accumulate and compact over hundreds of thousands of years, trapping samples of ancient air in a lattice of bubbles that serve as tiny time capsules.

By analyzing those cores, scientists can connect greenhouse-gas concentrations with temperatures going back 800,000 years. Now, a new European-led initiative hopes to eventually retrieve the oldest core yet, dating back 1.5 million years. But that impressive feat is still only the first step. Once they’ve done that, they’ll have to figure out how they’re going to extract the air from the ice. Read the full story.

—Christian Elliott

This story is from the latest edition of our print magazine, set to go live tomorrow. Subscribe today for as low as $8/month to ensure you receive full access to the new Ethics issue and in-depth stories on experimental drugs, AI assisted warfare, microfinance, and more.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 How AI got dragged into the culture wars

Fears about ‘woke’ AI fundamentally misunderstand how it works. Yet they’re gaining traction. (The Guardian)

+ Why it’s impossible to build an unbiased AI language model. (MIT Technology Review)

2 Researchers are racing to understand a new coronavirus variant

It’s unlikely to be cause for concern, but it shows this virus still has plenty of tricks up its sleeve. (Nature)

+ Covid hasn’t entirely gone away—here’s where we stand. (MIT Technology Review)

+ Why we can’t afford to stop monitoring it. (Ars Technica)

3 How Hilary became such a monster storm

Much of it is down to unusually hot sea surface temperatures. (Wired $)

+ The era of simultaneous climate disasters is here to stay. (Axios)

+ People are donning cooling vests so they can work through the heat. (Wired $)

4 Brain privacy is set to become important

Scientists are getting better at decoding our brain data. It’s surely only a matter of time before others want a peek. (The Atlantic $)

+ How your brain data could be used against you. (MIT Technology Review)

5 How Nvidia built such a big competitive advantage in AI chips

Today it accounts for 70% of all AI chip sales—and an even greater share for training generative models. (NYT $)

+ The chips it’s selling to China are less effective due to US export controls. (Ars Technica)

+ These simple design rules could turn the chip industry on its head. (MIT Technology Review)

6 Inside the complex world of dissociative identity disorder on TikTok

Reducing stigma is great, but doctors fear people are self-diagnosing or even imitating the disorder. (The Verge)

7 What TikTok might have to give up to keep operating in the US

This shows just how hollow the authorities’ purported data-collection concerns really are. (Forbes)

8 Soldiers in Ukraine are playing World of Tanks on their phones

It’s eerily similar to the war they are themselves fighting, but they say it helps them to dissociate from the horror. (NYT $)

9 Conspiracy theorists are sharing mad ideas on what causes wildfires

But it’s all just a convoluted way to try to avoid having to tackle climate change. (Slate $)

10 Christie’s accidentally leaked the location of tons of valuable art

Seemingly thanks to the metadata that often automatically attaches to smartphone photos. (WP $)

Quote of the day

“Is it going to take people dying for something to move forward?”

—An anonymous air traffic controller warns that staffing shortages in their industry, plus other factors, are starting to threaten passenger safety, the New York Times reports.

The big story

Inside effective altruism, where the far future counts a lot more than the present

October 2022

Since its birth in the late 2000s, effective altruism has aimed to answer the question “How can those with means have the most impact on the world in a quantifiable way?”—and supplied methods for calculating the answer.

It’s no surprise that effective altruism’s ideas have long faced criticism for reflecting white Western saviorism, alongside an avoidance of structural problems in favor of abstract math. And as believers pour even greater amounts of money into the movement’s increasingly sci-fi ideals, such charges are only intensifying. Read the full story.

—Rebecca Ackermann

We can still have nice things

A place for comfort, fun and distraction in these weird times. (Got any ideas? Drop me a line or tweet ’em at me.)

+ Watch Andrew Scott’s electrifying reading of the 1965 commencement address ‘Choose One of Five’ by Edith Sampson.

+ Here’s how Metallica makes sure its live performances ROCK. ($)

+ Cannot deal with this utterly ludicrous wooden vehicle.

+ Learn about a weird and wonderful new instrument called a harpejji.

Tech

Why we should all be rooting for boring AI

Published

2 years ago on 22 August 2023

By

Terry Power

This story originally appeared in The Algorithm, our weekly newsletter on AI. To get stories like this in your inbox first, sign up here.

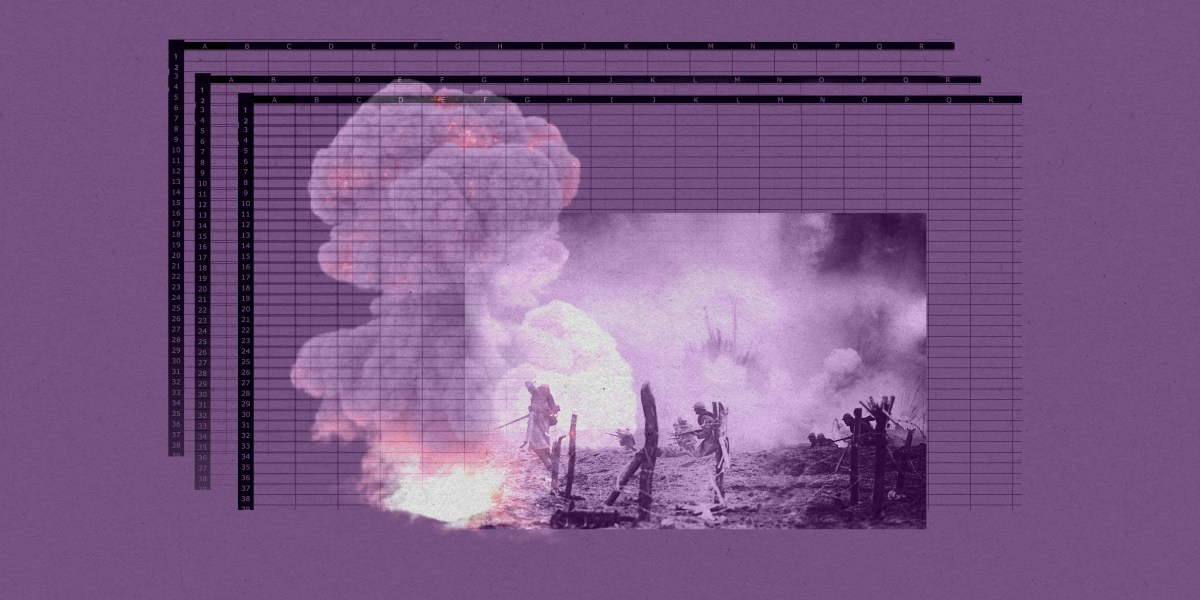

I’m back from a wholesome week off picking blueberries in a forest. So this story we published last week about the messy ethics of AI in warfare is just the antidote, bringing my blood pressure right back up again.

Arthur Holland Michel does a great job looking at the complicated and nuanced ethical questions around warfare and the military’s increasing use of artificial-intelligence tools. There are myriad ways AI could fail catastrophically or be abused in conflict situations, and there don’t seem to be any real rules constraining it yet. Holland Michel’s story illustrates how little there is to hold people accountable when things go wrong.

Last year I wrote about how the war in Ukraine kick-started a new boom in business for defense AI startups. The latest hype cycle has only added to that, as companies—and now the military too—race to embed generative AI in products and services.

Earlier this month, the US Department of Defense announced it is setting up a Generative AI Task Force, aimed at “analyzing and integrating” AI tools such as large language models across the department.

The department sees tons of potential to “improve intelligence, operational planning, and administrative and business processes.”

But Holland Michel’s story highlights why the first two use cases might be a bad idea. Generative AI tools, such as language models, are glitchy and unpredictable, and they make things up. They also have massive security vulnerabilities, privacy problems, and deeply ingrained biases.

Applying these technologies in high-stakes settings could lead to deadly accidents where it’s unclear who or what should be held responsible, or even why the problem occurred. Everyone agrees that humans should make the final call, but that is made harder by technology that acts unpredictably, especially in fast-moving conflict situations.

Some worry that the people lowest on the hierarchy will pay the highest price when things go wrong: “In the event of an accident—regardless of whether the human was wrong, the computer was wrong, or they were wrong together—the person who made the ‘decision’ will absorb the blame and protect everyone else along the chain of command from the full impact of accountability,” Holland Michel writes.

The only ones who seem likely to face no consequences when AI fails in war are the companies supplying the technology.

It helps companies when the rules the US has set to govern AI in warfare are mere recommendations, not laws. That makes it really hard to hold anyone accountable. Even the AI Act, the EU’s sweeping upcoming regulation for high-risk AI systems, exempts military uses, which arguably are the highest-risk applications of them all.

While everyone is looking for exciting new uses for generative AI, I personally can’t wait for it to become boring.

Amid early signs that people are starting to lose interest in the technology, companies might find that these sorts of tools are better suited for mundane, low-risk applications than solving humanity’s biggest problems.

Applying AI in, for example, productivity software such as Excel, email, or word processing might not be the sexiest idea, but compared to warfare it’s a relatively low-stakes application, and simple enough to have the potential to actually work as advertised. It could help us do the tedious bits of our jobs faster and better.

Boring AI is unlikely to break as easily and, most important, won’t kill anyone. Hopefully, soon we’ll forget we’re interacting with AI at all. (It wasn’t that long ago that machine translation was an exciting new thing in AI. Now most people don’t even think about its role in powering Google Translate.)

That’s why I’m more confident that organizations like the DoD will find success applying generative AI in administrative and business processes.

Boring AI is not morally complex. It’s not magic. But it works.

Deeper Learning

AI isn’t great at decoding human emotions. So why are regulators targeting the tech?

Amid all the chatter about ChatGPT, artificial general intelligence, and the prospect of robots taking people’s jobs, regulators in the EU and the US have been ramping up warnings against AI and emotion recognition. Emotion recognition is the attempt to identify a person’s feelings or state of mind using AI analysis of video, facial images, or audio recordings.

But why is this a top concern? Western regulators are particularly concerned about China’s use of the technology, and its potential to enable social control. And there’s also evidence that it simply does not work properly. Tate Ryan-Mosley dissected the thorny questions around the technology in last week’s edition of The Technocrat, our weekly newsletter on tech policy.

Bits and Bytes

Meta is preparing to launch free code-generating software

A version of its new LLaMA 2 language model that is able to generate programming code will pose a stiff challenge to similar proprietary code-generating programs from rivals such as OpenAI, Microsoft, and Google. The open-source program is called Code Llama, and its launch is imminent, according to The Information. (The Information)

OpenAI is testing GPT-4 for content moderation

Using the language model to moderate online content could really help alleviate the mental toll content moderation takes on humans. OpenAI says it’s seen some promising first results, although the tech does not outperform highly trained humans. A lot of big, open questions remain, such as whether the tool can be attuned to different cultures and pick up context and nuance. (OpenAI)

Google is working on an AI assistant that offers life advice

The generative AI tools could function as a life coach, offering up ideas, planning instructions, and tutoring tips. (The New York Times)

Two tech luminaries have quit their jobs to build AI systems inspired by bees

Sakana, a new AI research lab, draws inspiration from the animal kingdom. Founded by two prominent industry researchers and former Googlers, the company plans to make multiple smaller AI models that work together, the idea being that a “swarm” of programs could be as powerful as a single large AI model. (Bloomberg)