
Security is everyone’s job in the workplace

Published 2 years ago by Terry Power

Hackers around the globe are smart: they know that it isn’t just good code that helps them break into systems; it’s also about understanding—and preying upon—human behavior. The threat to businesses in the form of cyberattacks is only growing—especially as companies make the shift to embrace hybrid work.

But John Scimone, senior vice president and chief security officer at Dell Technologies, says “security is everyone’s job.” And building a culture that reflects that is a priority because cyberattacks are not going to decrease. He explains, “As we consider the vulnerability that industry and organizations face, technology and data is exploding rapidly, and growing in volume, variety, and velocity.” The increase in attacks means an increase in damage for businesses, he continues: “I would have to say that ransomware is probably the greatest risk facing most organizations today.”

And while ransomware isn’t a new challenge, it is compounded by the shift to hybrid work and the talent shortage experts have warned about for years. Scimone explains, “One of the key challenges we’ve seen in the IT space, and particularly in the security space, is a challenge around labor shortages.” He continues, “On the security side, we view the lack of cybersecurity professionals as one of the core vulnerabilities within the sector. It’s truly a crisis that both the public and private sectors have been warning about for years.”

However, investing in employees and building a strong culture can reap benefits for cybersecurity efforts. Scimone details the success Dell has seen: “Over the last year, we’ve seen thousands of real phishing attacks that were spotted and stopped as a result of our employees seeing them first and reporting them to us.”

And as much as organizations try to approach cybersecurity from a systemic and technical perspective, Scimone advises focusing on the employee, too: “So, training is essential, but again, it’s against the backdrop of a culture organizationally, where every team member knows they have a role to play.”


Full transcript

Laurel Ruma: From MIT Technology Review, I’m Laurel Ruma, and this is Business Lab, the show that helps business leaders make sense of new technologies coming out of the lab and into the marketplace.

Our topic today is cybersecurity and the strain of the work-from-anywhere trend on enterprises. With an increase in cybersecurity attacks, the imperative to secure a wider network of employees and devices is urgent. However, keeping security top of mind for employees requires investment in culture as well. Two words for you. Secured workforce.

My guest is John Scimone, senior vice president and chief security officer at Dell Technologies. Prior to Dell, he served as the global chief information security officer for Sony Group.

This episode of Business Lab is produced in association with Dell Technologies.

Welcome, John.

John Scimone: Thanks for having me, Laurel. Good to be here.

Laurel: To start, how would you describe the current data security landscape, and what do you see as the most significant data security threat?

John: For anybody who can tune into a news outlet today, we see that these attacks are hitting closer to home, affecting public events this year, threatening to disrupt our food supply chain and utilities, and we see cyberattacks hitting organizations of all sizes and across all industries. When I think about the landscape of cyber risk, I decompose it into three areas. First, how vulnerable am I? Next, how likely am I to be hit by one of these attacks? And finally, so what if I do? What are the consequences?

As we consider the vulnerability that industry and organizations face, technology and data is exploding rapidly, and growing in volume, variety, and velocity. There’s really no sign of it stopping, and in today’s on-demand economy, nothing happens without data. Our recent Data Paradox study (that we did with Forrester) confirmed that businesses are overwhelmed by data and that the pandemic has put additional strains on teams and resources. That strain shows up not just in the data they’re generating, where 44% of respondents said that the pandemic had significantly increased the amount of data they need to collect, store, and analyze, but also in the security implications of having more people working from home. More than half of the respondents have had to put emergency steps in place to keep data safe outside of the company network while people worked remotely.

We followed up with another study specifically on data protection against those backdrops. In this year’s global data protection index, we found that organizations are managing more than 10 times the amount of data that they did five years ago. Alarmingly, 82% of respondents are concerned that their organization’s existing data protection solutions won’t be able to meet all their future business challenges. And 74% believe that their organization has increased exposure to data loss from cyber threats, with the increase in the number of employees working from home.

Overall, we see that vulnerability is growing significantly. But what about likelihood? How likely are we to be hit by these things? As we think about likelihood, it’s really a question of how motivated and how capable the threats out there are. And from a motivation perspective, the risk to these criminals is low and the reward remains extremely high. Cyberattacks are estimated to cost the world trillions of dollars this year, and the reality is that very few criminals will face arrest or repercussions for it. And they’re becoming increasingly capable, and the tools and know-how to perpetrate these attacks are becoming more commoditized and widely available. The threats are growing in sophistication and prevalence.

Finally, from a consequences perspective, costs are continuing to rise when organizations are hit, whether the cost be brand reputational impact, operational outages, or impacts from litigation costs and fines. Our recent global data protection index shows that a million dollars was the average cost of data loss in the last 12 months. And a little over half a million dollars was the average cost of unplanned systems downtime over the last year. And there were numerous cases this year that were publicly reported where companies were facing ransom demands in excess of $50 million.

I worry that these consequences will only continue to grow. In light of this, I would have to say that ransomware is probably the greatest risk facing most organizations today. In reality, most companies remain vulnerable to it. It’s happening with increasing prevalence—some studies show as frequently as every 11 seconds a ransomware attack is happening—and consequences are rising, hitting some organizations to the tune of tens of millions of dollars of ransom demands.
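The three-part decomposition described above (vulnerability, likelihood, consequences) can be sketched as a simple ranking exercise. This is only an illustrative sketch, not a method described in the interview: the `RiskEstimate` class, the multiplication of the three factors, and every number below are assumptions chosen for the example. The dollar figures loosely echo the averages mentioned above, and the probabilities are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskEstimate:
    """Hypothetical container for the three questions in the answer above."""
    name: str
    vulnerability: float    # assumed 0..1: how exposed am I?
    likelihood: float       # assumed 0..1: how likely am I to be hit?
    consequence_usd: float  # assumed cost if the attack succeeds

    def expected_loss(self) -> float:
        # One simple (assumed) way to combine the three factors.
        return self.vulnerability * self.likelihood * self.consequence_usd


def rank_risks(risks):
    """Return the risks ordered from highest to lowest expected loss."""
    return sorted(risks, key=lambda r: r.expected_loss(), reverse=True)


# Invented inputs for illustration only.
risks = [
    RiskEstimate("unplanned downtime", 0.4, 0.5, 500_000),
    RiskEstimate("ransomware", 0.6, 0.5, 1_000_000),
    RiskEstimate("phishing credential theft", 0.5, 0.8, 200_000),
]

ranked = rank_risks(risks)
for risk in ranked:
    print(f"{risk.name}: {risk.expected_loss():,.0f} USD")
```

With these made-up inputs, ransomware ranks first, which matches the priority stated in the answer, but a real assessment would need an organization's own estimates.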

Laurel: With the global shift to working anywhere and the increase of cybersecurity attacks in mind, what kinds of security risks do companies need to think about? And how are the attacks different or unusual from two or three years ago?

John: As we saw a mass mobility movement, with many companies’ employees shifting to remote work, we saw an increase in the amount of risk as organizations had employees using their corporate laptops and corporate systems outside of their traditional security boundaries. Unfortunately, we would also see employees using their personal systems for work purposes, and their work systems for personal purposes. In reality, many organizations’ environments were never designed from the get-go with a mass-mobility remote workforce in mind. As a result, the vulnerability of these environments has increased significantly.

Additionally, as we think about how criminals operate, criminals feed on uncertainty and fear. Regardless of whether it’s cybercrime or physical-world crime, uncertainty and fear create a ripe environment for crime of all sorts. Unfortunately, both uncertainty and fear have been plentiful over the last 18 months. And we’ve seen that cyber criminals have capitalized on it, taking advantage of companies’ lack of preparedness, considering the speed of disruption and the proliferation of data that was taking place. It was an opportune environment for cybercrime to run rampant. In our own research, we saw that 44% of businesses surveyed have experienced more cyberattacks and data loss during this past year or so.

Laurel: Well, that’s certainly significant. So, what is it like now internally from an IT support perspective? Teams have to support all of these additional nodes from people working remotely while also addressing the additional risks of social engineering and ransomware. How has that combination increased data security threats?

John: One interesting byproduct of the pandemic and of this massive shift to remote work is that it served as a significant accelerator for traditional IT initiatives. We saw an acceleration of digital transformation in IT initiatives that may previously have been planned or in-progress. But as you mentioned, resources are stretched. One of the key challenges we’ve seen in the IT space and particularly in the security space is a challenge around labor shortages. On the security side, we view the lack of cybersecurity professionals as one of the core vulnerabilities within the sector. It’s truly a crisis that both the public and private sectors have been warning about for years. In fact, there was a cybersecurity workforce study done last year by ISC2 that estimates we are 3.1 million trained cybersecurity professionals short of what industry actually needs to protect against cybercrime.

As we look forward, we estimate we’ll need to increase talent by about 41% in the US and 89% worldwide just to meet the needs of the digitally transforming society as these demands are rising. Labor is certainly a key piece of the equation and a concern from a vulnerability perspective. We look to start organizations off in a better position in this regard. We believe that building security, privacy, and resiliency into the offering should be central, starting from the design to manufacturing, all the way through a secure development process through supply chain, and following the data and applications everywhere they go. We call this strategy “intrinsic security,” and at its essence, it’s building security into the infrastructure and platforms that customers will use, therefore requiring less expertise to get security right.

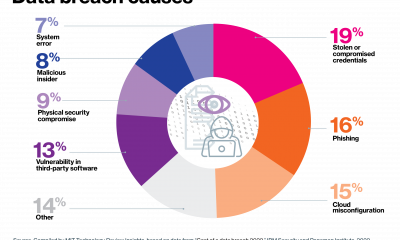

As you point out, the attacks are not slowing down. Social engineering, in particular, continues to be a top concern. For those unfamiliar with social engineering, it’s essentially when criminals try to trick employees into handing over information or opening up the door to let criminals into their system, such as through phishing emails, which we continue to see as one of the most popular methods used by hackers to get their first foot in the door into corporate networks.

Laurel: Is intrinsic security a lot like security by design, where products are intentionally built with a focus on security first, not security last?

John: That’s right. Security by design, privacy by design, and not just by design but by default: getting it right, making it easy to do the right thing from a security perspective when considering using these technologies. It means an increase, of course, in security professionals across the company, but also ensuring security professionals are touching all of the offerings at every stage of the design and making sure that best practices are being instituted from the design, development, and manufacturing stages all the way through, even after they’re sold, to the services and support that follow them. We view this as a winning strategy in light of the challenges we see at scale, the challenges our customers are facing in finding the right cybersecurity talent to help them protect their organizations.

Laurel: I’m assuming Dell started thinking about this quite a while ago because the security hiring and reskilling challenges have been around for a while. And obviously, as the bad actors have become more proficient, it takes more and more good people to stop them. With that in mind, how do you feel the pandemic sped up that focus? Or is this something Dell saw coming?

John: At Dell, we’ve been investing in this area for a number of years. It’s clearly been a challenge, but as we’ve seen, the pandemic has certainly accelerated and amplified the challenge and the impacts that our customers face. Therefore, it’s only more important. We’ve increased our investment in security talent, engineering, and acumen over a number of years. And we’ll continue to invest, recognizing that, as it’s a priority for our customers, it’s a priority for us.

Laurel: That does make sense. On the other side of the coin, how is Dell ensuring employees themselves take data protection seriously, and not fall for phishing attempts, for example? What kind of culture and mindset needs to be deployed to make security a company-wide priority?

John: It really is a culture at Dell, where security is everyone’s job. It’s not just my own corporate security team or the security teams within our product and offering groups. It touches every employee and every employee fulfilling their responsibility to help protect our company and protect our customers. We’ve been building over many years a culture of security where we arm our employees with the right knowledge and training so that they can make the right decisions, helping us thwart some of these criminal activities that we see, like all companies. One particular training program that’s been very successful has been our phishing training program. In this, we are continuously testing and training our employees by sending them simulated phishing emails, getting them more familiar with what to look for and how to spot phishing emails. Even just in this last quarter, we saw more employees spot and report the phishing simulation test than ever before.

These training activities are working, and they’re making a difference. Over the last year, we’ve seen thousands of real phishing attacks that were spotted and stopped as a result of our employees seeing them first and reporting them to us. So, training is essential, but again, it’s against the backdrop of a culture organizationally, where every team member knows they have a role to play. Even this month, as we look at October Cybersecurity Awareness Month, we’re amplifying our efforts and promoting security awareness and the responsibilities that team members have, whether it be how to securely use the VPN, securing their home network, or even how to travel securely. All of this is important, but it starts with employees knowing what to do, and then understanding it’s their responsibility to do so.

Laurel: And that shouldn’t be too surprising. Obviously, Dell is a large global company, but at the same time, is this an initiative that employees are starting to take a bit of pride in? Is there, perhaps, less complaining about, “Oh, I have to change my password yet again,” or, “Oh, now I have to sign into the VPN.”

John: One of the interesting byproducts of the increased attacks seen on the news every day is that they commonly now impact the everyday person at home. It’s affecting whether people can put food on the table and what type of food they can order and what’s available. Awareness has increased an incredible amount over the last couple of years. With that understanding of why this is important, we’ve seen a rise in both the attention employees pay to this responsibility and the pride they take in it. We even have internal scoreboards. We make it a friendly competition where, organizationally, each team can see who’s finding the most phishing tests. They love being able to help the company, and more importantly, help our customers in an additional way that goes beyond the important work they’re doing day to day in their primary role.

Laurel: That’s great. So, this is the question I like to ask security experts because you see so much. What kind of security breaches are you hearing about from customers or businesses around the industry, and what surprised you about these particular firsthand experiences?

John: It’s an unfortunate reality that we get calls pretty much every day from customers who are facing some of the worst days in their corporate experience, whether they’re in the throes of being hit by ransomware, dealing with some other type of cyber intrusion, dealing with data theft, or digital extortion, and it’s quite horrible to see. As I talk to our customers and even colleagues across industry, one of the common messages that rings true through all of these engagements is how they wish they had prepared a bit more. They wish they had taken the time and had the foresight to have certain safeguards in place, whether it be cyber-threat monitoring and detection capabilities, or increasingly with ransomware, more focus on having the right storage and data backups and protection in place, both in their core on-premises environment and in the cloud.

But it has been surprising to me how many organizations don’t have truly resilient data protection strategies, given how devastating ransomware is. Many still think of data backups in the era of tornadoes and floods, where if you’ve got your backup 300 miles away from where you’ve got your data stored, then you’re good, your backups are safe. But people aren’t thinking about backups today that are being targeted by humans who literally find your backups wherever they are, and they seek to destroy them in order to make their extortion schemes more impactful. So, thinking through modern data backups and cyber resiliency in light of ransomware, it’s surprising to me how few are educated in thinking through this.

But I will say that with increasing prevalence, we’re having these conversations with customers, and customers are making the investments more proactively before that day comes and putting themselves on better footing for when it does.

Laurel: Do you feel that companies are thinking about data protection strategies differently now with the cloud? And what kinds of cloud tools and strategies will help companies keep their data secure?

John: It’s interesting because there’s a general realization that customer workloads and data are everywhere, whether it’s on premises, at the edge, or in public clouds. We believe a multi-hybrid cloud approach that includes the data center is one that offers consistency across all of the different environments as a best practice and how you think about treating your data protection strategies. Increasingly we see people taking a multi-cloud approach because of the security benefits that come with it, but also cost benefits, performance, compliance, privacy, and so on. What’s interesting is when we looked at our global data protection index findings, we learned that applications are being updated and deployed across a large range of cloud environments, and yet confidence is often lacking when it comes to how well the data can be protected. So, many organizations leverage multi-cloud infrastructure, deploy application workloads, but only 36% actually stated that they were confident in their cloud data protection capabilities.

By contrast, one-fifth of respondents indicated that they had some doubt or were not very or at all confident in their ability to protect data in the public cloud. I find this quite alarming, particularly when many organizations are using the public cloud to back up their data as part of their disaster recovery plans. They’re essentially copying all of their business data to a computing environment in which they have low confidence in the security. Organizations need to ensure they’ve got solutions in place to protect data in the multi-cloud and across their virtual workloads. From our perspective, we’re focused on intrinsic security, building the security resiliency and privacy into the solutions before they’re handed to our customers. The less customers have to think about security and find ways to staff their own hard-to-hire security experts, the better.

A couple other strategies to consider are, first, selecting the right partner. On average, we found the cost of data loss in the last year is approaching four times higher for organizations that are using multiple protection vendors as compared to those who are using a single vendor approach. Finally, and most importantly, everybody needs a data vault. A data vault that’s isolated off the network, that’s built with ransomware in mind to contend with the threats that we’re seeing. This is where customers can put their most critical data and have the confidence that they’re going to be able to recover their known good data when that day comes where data is really the lifeline that’s going to keep their business running.

Laurel: Is the data vault a hardware solution, a cloud solution, or a little bit of both? Maybe it depends on your business.

John: There’s certainly a number of different ways to architect it. In general, there are three key considerations when building a cyber-resilient data vault. The first is it has to be isolated. Anything that’s on the network is potentially exposed to risks.

Second is that it has to be immutable, which essentially means that once you back up the data, that backup can never be changed. Once it’s written onto the disk, you can never change it again. And third, and finally, it has to be intelligent. These systems have to be designed to be as intelligent, if not more intelligent, than the threats that are going to be undoubtedly coming after them. Designing these data backup systems with the threat environment in mind by experts who deeply understand security, deeply understand ransomware, is essential.
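The immutability property described above can be illustrated with a toy write-once store. This is a minimal sketch under stated assumptions, not how any real data vault product works: the `ImmutableVault` class and its methods are hypothetical, the data lives only in memory, and the checksum comparison on read is a stand-in for far more sophisticated integrity checks. Isolation from the network cannot be shown in a snippet like this.

```python
import hashlib


class ImmutableVault:
    """Toy write-once store: a backup can be added and read, never changed."""

    def __init__(self):
        self._blobs = {}    # backup_id -> stored bytes
        self._digests = {}  # backup_id -> SHA-256 recorded at write time

    def write(self, backup_id: str, data: bytes) -> str:
        # Refuse any attempt to overwrite an existing backup.
        if backup_id in self._blobs:
            raise PermissionError(
                f"backup {backup_id!r} already exists and is immutable"
            )
        self._blobs[backup_id] = bytes(data)
        digest = hashlib.sha256(data).hexdigest()
        self._digests[backup_id] = digest
        return digest

    def read(self, backup_id: str) -> bytes:
        # Re-hash on every read so silent corruption is detected.
        data = self._blobs[backup_id]
        if hashlib.sha256(data).hexdigest() != self._digests[backup_id]:
            raise ValueError(f"integrity check failed for {backup_id!r}")
        return data


vault = ImmutableVault()
vault.write("2021-10-01", b"payroll records")

try:
    vault.write("2021-10-01", b"encrypted by attacker")
except PermissionError as err:
    print(err)

print(vault.read("2021-10-01"))
```

The second write is rejected and the original bytes remain readable, which is the behavior the "can never be changed" requirement asks for.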

Laurel: I see. That sounds like how some three-letter government agencies work, offline with little access.

John: Unfortunately, that’s what the world has come to. Again, there’s really no sign of this changing. If we look at the incentives that cyber criminals face, the rewards are incredible. The repercussions are low. It’s really the largest, most beneficial criminal enterprise in the history of humankind in terms of what they’re likely to get out of an attack versus the likelihood that they’re going to get caught and go to jail. I don’t see that changing anytime soon. As a result, businesses need to be prepared.

Laurel: It’s certainly true. We don’t hear about all the attacks either, but when we do, there is a reputation cost there as well. I’m thinking about the attack earlier in the year at the water treatment plant in Florida. Do you expect more focused attacks on infrastructure because it’s seen as an easy way in?

John: Unfortunately, this is not the problem of only one industry. Regardless of the nature of the business you’re running and the industry you’re in, when you look at your organization through the lens of a criminal, there’s often something to be had, whether it’s geopolitical incentives, the monetization of criminal fraud, or whether it’s stealing the data that you hold and reselling it on the black market. There are very few companies that truly can look at themselves and say, “I don’t have something that a cybercriminal would want.” And that’s something that organizations of every size need to contend with.

Laurel: Especially as companies incorporate machine learning, artificial intelligence, and like you mentioned earlier, edge and IoT devices—there is data everywhere. With that in mind, as well as the multiple touchpoints you’re trying to secure, including your work-from-anywhere workforce, how can companies best secure data?

John: It’s a double-edged sword. The digital transformation that Dell has been able to witness firsthand has been incredible. In terms of improvements in quality of life and the way society is transforming through emerging technologies like AI and ML, and the explosion of devices at the edge and IoT, the benefits are tremendous. At the same time, it all represents potentially new risk if it’s invested in and deployed in a way that isn’t secure and isn’t well prepared for. In fact, we found with our global data protection index that 63% of respondents believe that these technologies pose a risk to data protection, that these risks are likely contributing to fears that organizations aren’t future ready, and that they may be at risk of disruption over the course of the next year.

The lack of data protection solutions for newer technologies was actually one of the top three data protection challenges we found organizations citing when surveyed. Investing in these emerging technologies is essential for digitally transforming organizations, and organizations that are not digitally transforming are not likely to survive well in the era we’re looking at competitively. But at the same time, it’s critical that organizations ensure their data protection infrastructure is able to keep pace with their broader digital transformation and investment in these newer technologies.

Laurel: When we think about all of this in aggregate, are there tips you have for companies to future proof their data strategy?

John: There are certainly a few things that come to mind. First, it’s important to be continuously reflecting on priorities from a risk perspective. The reality is we can’t secure everything perfectly, so prioritization is critical. You have to ensure that you’re protecting what matters the most to your business. Performing regular strategic risk assessments and having those inform the investments and the priorities that organizations are pursuing is an essential backdrop against which you actually launch some of these security initiatives and activities.

The second thing that comes to mind is that practice makes perfect. Exercise, exercise, exercise. Ask yourself: could you really recover if you were hit with ransomware? How sure are you of that answer? We find that organizations that take the time to practice, do internal exercises, run mock simulations, and go through the process of asking themselves those questions (Do I pay the ransom? Do I not? Can I restore my backups? How confident am I that I can?) are much more likely to perform well when the day actually comes where they’re hit by one of these devastating attacks. Unfortunately, it’s increasingly likely that most organizations will face that day.

Finally, it’s critical that security strategies are connected to business strategies. Most strategies today from a business perspective, of course, will fail if the data that they rely on is not trusted and available. But cyber-resiliency efforts and security efforts can’t be enacted on an island of their own. They must be informed by and supportive of business strategy and priorities. I haven’t met a customer yet whose business strategy remains viable if they’re hit by ransomware or some other strategic data protection threat, and they’re not able to quickly and confidently restore their data. A core question to ask yourself is, how confident are you in your preparedness today in light of everything that we’ve been talking through? And how are you evolving your cyber-resiliency strategy to better prepare?

Laurel: That certainly is a key takeaway, right? It’s not just a technical problem or a technology problem. It’s also a business problem. Everyone has to participate in thinking about this data strategy.

John: Absolutely.

Laurel: Well, thank you very much, John. It’s been fantastic to have you today on the Business Lab.

John: My pleasure. Thank you for having me.

Laurel: That was John Scimone, the chief security officer at Dell Technologies, whom I spoke with from Cambridge, Massachusetts, the home of MIT and MIT Technology Review, overlooking the Charles River. That’s it for this episode of Business Lab. I’m your host, Laurel Ruma. I’m the Director of Insights, the custom publishing division of MIT Technology Review. We were founded in 1899 at the Massachusetts Institute of Technology. You can find us in print, on the web, and at events each year around the world. For more information about us and the show, please check out our website at technologyreview.com.

This show is available wherever you get your podcasts. If you enjoyed this episode, we hope you’ll take a moment to rate and review us. This episode was produced by Collective Next. Business Lab is a production of MIT Technology Review. Thanks for listening.

This podcast episode was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.


My senior spring in high school, I decided to defer my MIT enrollment by a year. I had always planned to take a gap year, but after receiving the silver tube in the mail and seeing all my college-bound friends plan out their classes and dorm decor, I got cold feet. Every time I mentioned my plans, I was met with questions like “But what about school?” and “MIT is cool with this?”

Yeah. MIT totally is. Postponing your MIT start date is as simple as clicking a checkbox.


Now, having finished my first year of classes, I’m really grateful that I stuck with my decision to delay MIT, as I realized that having a full year of unstructured time is a gift. I could let my creative juices run. Pick up hobbies for fun. Do cool things like work at an AI startup and teach myself how to create latte art. My favorite part of the year, however, was backpacking across Europe. I traveled through Austria, Slovakia, Russia, Spain, France, the UK, Greece, Italy, Germany, Poland, Romania, and Hungary.

Moreover, despite my fear that I’d be losing a valuable year, traveling turned out to be the most productive thing I could have done with my time. I got to explore different cultures, meet new people from all over the world, and gain unique perspectives that I couldn’t have gotten otherwise. My travels throughout Europe allowed me to leave my comfort zone and expand my understanding of the greater human experience.

“In Iceland there’s less focus on hustle culture, and this relaxed approach to work-life balance ends up fostering creativity. This was a wild revelation to a bunch of MIT students.”

When I became a full-time student last fall, I realized that StartLabs, the premier undergraduate entrepreneurship club on campus, gives MIT undergrads a similar opportunity to expand their horizons and experience new things. I immediately signed up. At StartLabs, we host fireside chats and ideathons throughout the year. But our flagship event is our annual TechTrek over spring break. In previous years, StartLabs has gone on TechTrek trips to Germany, Switzerland, and Israel. On these fully funded trips, StartLabs members have visited and collaborated with industry leaders, incubators, startups, and academic institutions. They take these treks both to connect with the global startup sphere and to build closer relationships within the club itself.

Most important, however, the process of organizing the TechTrek is itself an expedited introduction to entrepreneurship. The trip is entirely planned by StartLabs members; we figure out travel logistics, find sponsors, and then discover ways to optimize our funding.

In organizing this year’s trip to Iceland, we had to learn how to delegate roles to all the planners and how to maintain morale when making this trip a reality seemed to be an impossible task. We woke up extra early to take 6 a.m. calls with Icelandic founders and sponsors. We came up with options for different levels of sponsorship, used pattern recognition to deduce the email addresses of hundreds of potential contacts at organizations we wanted to visit, and got scrappy about using our LinkedIn connections.

And as any good entrepreneur must, we had to learn how to be lean and maximize our resources. To stretch our food budget, we planned all our incubator and company visits around lunchtime in hopes of getting fed, played human Tetris as we fit 16 people into a six-person Airbnb, and emailed grocery stores to get their nearly expired foods for a discount. We even made a deal with the local bus company to give us free tickets in exchange for a story post on our Instagram account.

Tech

The Download: spying keyboard software, and why boring AI is best

Published 9 months ago on 22 August 2023

By Terry Power

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

How ubiquitous keyboard software puts hundreds of millions of Chinese users at risk

For millions of Chinese people, the first software they download onto devices is always the same: a keyboard app. Yet few of them are aware that it may make everything they type vulnerable to spying eyes.

QWERTY keyboards are inefficient for typing Chinese, because many characters share the same latinized spelling. As a result, many people switch to smart, localized keyboard apps to save time and frustration. Today, over 800 million Chinese people use third-party keyboard apps on their PCs, laptops, and mobile phones.

But a recent report by the Citizen Lab, a University of Toronto–affiliated research group, revealed that Sogou, one of the most popular Chinese keyboard apps, had a massive security loophole. Read the full story.

—Zeyi Yang

Why we should all be rooting for boring AI

Earlier this month, the US Department of Defense announced it is setting up a Generative AI Task Force, aimed at “analyzing and integrating” AI tools such as large language models across the department. It hopes they could improve intelligence and operational planning.

But those might not be the right use cases, writes our senior AI reporter Melissa Heikkilä. Generative AI tools, such as language models, are glitchy and unpredictable, and they make things up. They also have massive security vulnerabilities, privacy problems, and deeply ingrained biases.

Applying these technologies in high-stakes settings could lead to deadly accidents where it’s unclear who or what should be held responsible, or even why the problem occurred. The DoD’s best bet is to apply generative AI to more mundane things like Excel, email, or word processing. Read the full story.

This story is from The Algorithm, Melissa’s weekly newsletter giving you the inside track on all things AI. Sign up to receive it in your inbox every Monday.

The ice cores that will let us look 1.5 million years into the past

To better understand the role atmospheric carbon dioxide plays in Earth’s climate cycles, scientists have long turned to ice cores drilled in Antarctica, where snow layers accumulate and compact over hundreds of thousands of years, trapping samples of ancient air in a lattice of bubbles that serve as tiny time capsules.

By analyzing those cores, scientists can connect greenhouse-gas concentrations with temperatures going back 800,000 years. Now, a new European-led initiative hopes to eventually retrieve the oldest core yet, dating back 1.5 million years. But that impressive feat is still only the first step. Once they’ve done that, they’ll have to figure out how they’re going to extract the air from the ice. Read the full story.

—Christian Elliott

This story is from the latest edition of our print magazine, set to go live tomorrow. Subscribe today for as low as $8/month to ensure you receive full access to the new Ethics issue and in-depth stories on experimental drugs, AI-assisted warfare, microfinance, and more.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 How AI got dragged into the culture wars

Fears about ‘woke’ AI fundamentally misunderstand how it works. Yet they’re gaining traction. (The Guardian)

+ Why it’s impossible to build an unbiased AI language model. (MIT Technology Review)

2 Researchers are racing to understand a new coronavirus variant

It’s unlikely to be cause for concern, but it shows this virus still has plenty of tricks up its sleeve. (Nature)

+ Covid hasn’t entirely gone away—here’s where we stand. (MIT Technology Review)

+ Why we can’t afford to stop monitoring it. (Ars Technica)

3 How Hilary became such a monster storm

Much of it is down to unusually hot sea surface temperatures. (Wired $)

+ The era of simultaneous climate disasters is here to stay. (Axios)

+ People are donning cooling vests so they can work through the heat. (Wired $)

4 Brain privacy is set to become important

Scientists are getting better at decoding our brain data. It’s surely only a matter of time before others want a peek. (The Atlantic $)

+ How your brain data could be used against you. (MIT Technology Review)

5 How Nvidia built such a big competitive advantage in AI chips

Today it accounts for 70% of all AI chip sales—and an even greater share for training generative models. (NYT $)

+ The chips it’s selling to China are less effective due to US export controls. (Ars Technica)

+ These simple design rules could turn the chip industry on its head. (MIT Technology Review)

6 Inside the complex world of dissociative identity disorder on TikTok

Reducing stigma is great, but doctors fear people are self-diagnosing or even imitating the disorder. (The Verge)

7 What TikTok might have to give up to keep operating in the US

This shows just how hollow the authorities’ purported data-collection concerns really are. (Forbes)

8 Soldiers in Ukraine are playing World of Tanks on their phones

It’s eerily similar to the war they are themselves fighting, but they say it helps them to dissociate from the horror. (NYT $)

9 Conspiracy theorists are sharing mad ideas on what causes wildfires

But it’s all just a convoluted way to try to avoid having to tackle climate change. (Slate $)

10 Christie’s accidentally leaked the location of tons of valuable art

Seemingly thanks to the metadata that often automatically attaches to smartphone photos. (WP $)

Quote of the day

“Is it going to take people dying for something to move forward?”

—An anonymous air traffic controller warns that staffing shortages in their industry, plus other factors, are starting to threaten passenger safety, the New York Times reports.

The big story

Inside effective altruism, where the far future counts a lot more than the present

October 2022

Since its birth in the late 2000s, effective altruism has aimed to answer the question “How can those with means have the most impact on the world in a quantifiable way?”—and supplied methods for calculating the answer.

It’s no surprise that effective altruism’s ideas have long faced criticism for reflecting white Western saviorism, alongside an avoidance of structural problems in favor of abstract math. And as believers pour even greater amounts of money into the movement’s increasingly sci-fi ideals, such charges are only intensifying. Read the full story.

—Rebecca Ackermann

We can still have nice things

A place for comfort, fun and distraction in these weird times. (Got any ideas? Drop me a line or tweet ’em at me.)

+ Watch Andrew Scott’s electrifying reading of the 1965 commencement address ‘Choose One of Five’ by Edith Sampson.

+ Here’s how Metallica makes sure its live performances ROCK. ($)

+ Cannot deal with this utterly ludicrous wooden vehicle.

+ Learn about a weird and wonderful new instrument called a harpejji.

Tech

Why we should all be rooting for boring AI

Published 9 months ago on 22 August 2023

By Terry Power

This story originally appeared in The Algorithm, our weekly newsletter on AI. To get stories like this in your inbox first, sign up here.

I’m back from a wholesome week off picking blueberries in a forest. So this story we published last week about the messy ethics of AI in warfare is just the antidote, bringing my blood pressure right back up again.

Arthur Holland Michel does a great job looking at the complicated and nuanced ethical questions around warfare and the military’s increasing use of artificial-intelligence tools. There are myriad ways AI could fail catastrophically or be abused in conflict situations, and there don’t seem to be any real rules constraining it yet. Holland Michel’s story illustrates how little there is to hold people accountable when things go wrong.

Last year I wrote about how the war in Ukraine kick-started a new boom in business for defense AI startups. The latest hype cycle has only added to that, as companies—and now the military too—race to embed generative AI in products and services.

Earlier this month, the US Department of Defense announced it is setting up a Generative AI Task Force, aimed at “analyzing and integrating” AI tools such as large language models across the department.

The department sees tons of potential to “improve intelligence, operational planning, and administrative and business processes.”

But Holland Michel’s story highlights why the first two use cases might be a bad idea. Generative AI tools, such as language models, are glitchy and unpredictable, and they make things up. They also have massive security vulnerabilities, privacy problems, and deeply ingrained biases.

Applying these technologies in high-stakes settings could lead to deadly accidents where it’s unclear who or what should be held responsible, or even why the problem occurred. Everyone agrees that humans should make the final call, but that is made harder by technology that acts unpredictably, especially in fast-moving conflict situations.

Some worry that the people lowest on the hierarchy will pay the highest price when things go wrong: “In the event of an accident—regardless of whether the human was wrong, the computer was wrong, or they were wrong together—the person who made the ‘decision’ will absorb the blame and protect everyone else along the chain of command from the full impact of accountability,” Holland Michel writes.

The only ones who seem likely to face no consequences when AI fails in war are the companies supplying the technology.

It helps companies when the rules the US has set to govern AI in warfare are mere recommendations, not laws. That makes it really hard to hold anyone accountable. Even the AI Act, the EU’s sweeping upcoming regulation for high-risk AI systems, exempts military uses, which arguably are the highest-risk applications of them all.

While everyone is looking for exciting new uses for generative AI, I personally can’t wait for it to become boring.

Amid early signs that people are starting to lose interest in the technology, companies might find that these sorts of tools are better suited for mundane, low-risk applications than solving humanity’s biggest problems.

Applying AI in, for example, productivity software such as Excel, email, or word processing might not be the sexiest idea, but compared to warfare it’s a relatively low-stakes application, and simple enough to have the potential to actually work as advertised. It could help us do the tedious bits of our jobs faster and better.

Boring AI is unlikely to break as easily and, most important, won’t kill anyone. Hopefully, soon we’ll forget we’re interacting with AI at all. (It wasn’t that long ago that machine translation was an exciting new thing in AI. Now most people don’t even think about its role in powering Google Translate.)

That’s why I’m more confident that organizations like the DoD will find success applying generative AI in administrative and business processes.

Boring AI is not morally complex. It’s not magic. But it works.

Deeper Learning

AI isn’t great at decoding human emotions. So why are regulators targeting the tech?

Amid all the chatter about ChatGPT, artificial general intelligence, and the prospect of robots taking people’s jobs, regulators in the EU and the US have been ramping up warnings against AI and emotion recognition. Emotion recognition is the attempt to identify a person’s feelings or state of mind using AI analysis of video, facial images, or audio recordings.

But why is this a top concern? Western regulators are particularly concerned about China’s use of the technology, and its potential to enable social control. And there’s also evidence that it simply does not work properly. Tate Ryan-Mosley dissected the thorny questions around the technology in last week’s edition of The Technocrat, our weekly newsletter on tech policy.

Bits and Bytes

Meta is preparing to launch free code-generating software

A version of its new LLaMA 2 language model that is able to generate programming code will pose a stiff challenge to similar proprietary code-generating programs from rivals such as OpenAI, Microsoft, and Google. The open-source program is called Code Llama, and its launch is imminent, according to The Information. (The Information)

OpenAI is testing GPT-4 for content moderation

Using the language model to moderate online content could really help alleviate the mental toll content moderation takes on humans. OpenAI says it’s seen some promising first results, although the tech does not outperform highly trained humans. A lot of big, open questions remain, such as whether the tool can be attuned to different cultures and pick up context and nuance. (OpenAI)

Google is working on an AI assistant that offers life advice

The generative AI tools could function as a life coach, offering up ideas, planning instructions, and tutoring tips. (The New York Times)

Two tech luminaries have quit their jobs to build AI systems inspired by bees

Sakana, a new AI research lab, draws inspiration from the animal kingdom. Founded by two prominent industry researchers and former Googlers, the company plans to make multiple smaller AI models that work together, the idea being that a “swarm” of programs could be as powerful as a single large AI model. (Bloomberg)